Cerebras Cloud @ Cirrascale

Democratizing High-Performance

AI Compute

The world’s fastest AI accelerator is no longer limited to large national research labs.

Gain access to this powerful system as a cloud service with Cerebras Cloud @ Cirrascale.

A Powerful Cloud Solution for AI Compute

Cerebras Cloud @ Cirrascale is powered by the groundbreaking Cerebras CS-2 system, which is designed to enable fast, flexible training and low-latency datacenter inference. Now, thanks to the partnership between Cerebras and Cirrascale Cloud Services, you can experience it in the cloud.

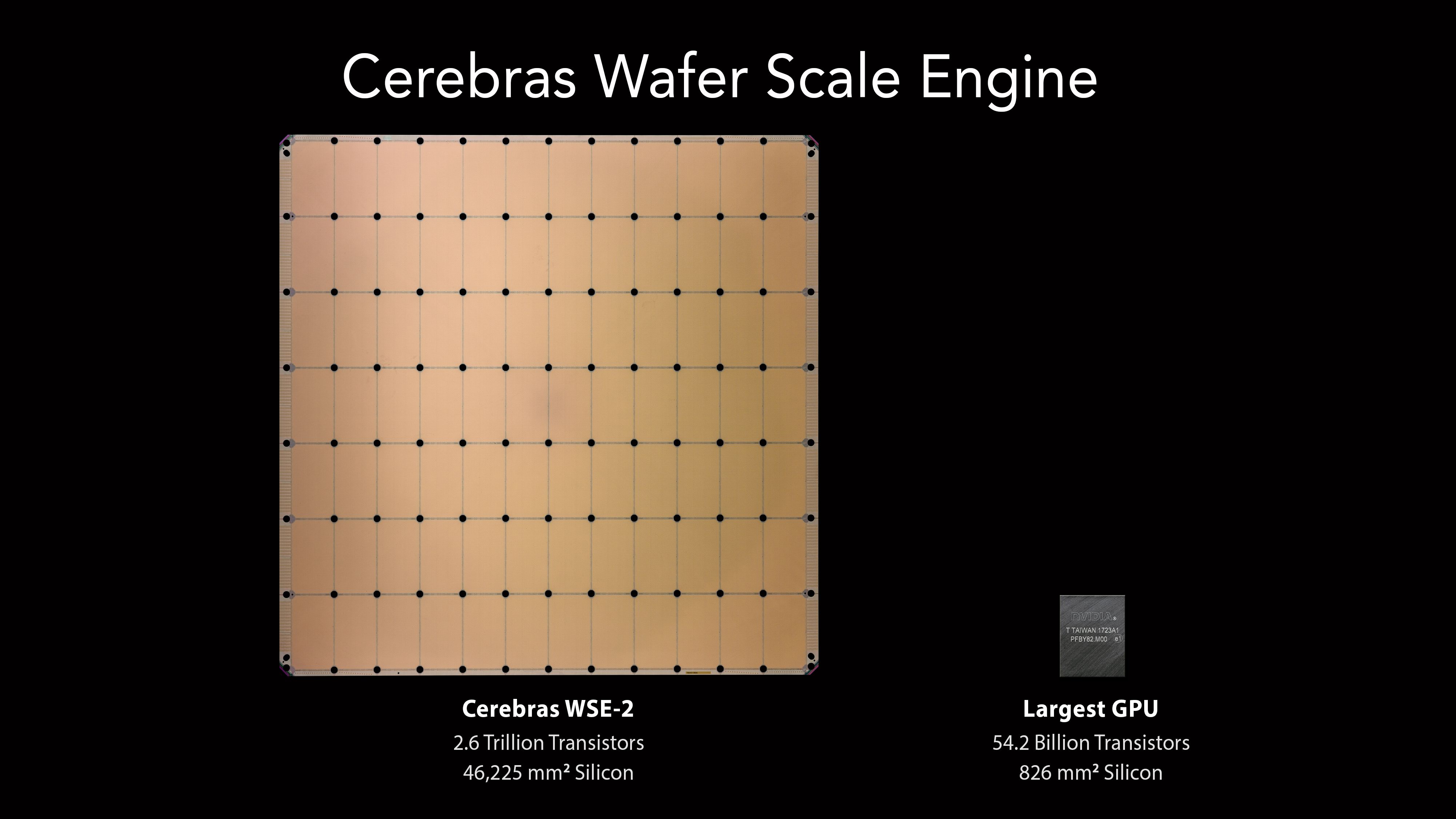

Featuring the 2nd generation Wafer-Scale Engine (WSE-2), the Cerebras CS-2 system has greater compute density, more fast memory, and higher bandwidth interconnect than any other AI solution.

Easily programmable with leading ML frameworks, the CS-2 system helps industry and research organizations unlock cluster-scale AI performance with the simplicity of a single device. Achieve faster time to solution with greater efficiency.

Download Whitepaper

The CS-2 Product overview is a comprehensive look into the Cerebras’ CS-2 motivation, architecture and capabilities.

DOWNLOAD WHITEPAPERWhy is the Cerebras Wafer Scale Engine the Right Solution for AI?

Today’s state-of-the-art models take days or weeks to train. Organizations often need to distribute training across tens, hundreds, even thousands of GPUs to make training times more tractable. These huge clusters of legacy, general-purpose processors are hard to program and bottlenecked by communication and synchronization overheads.

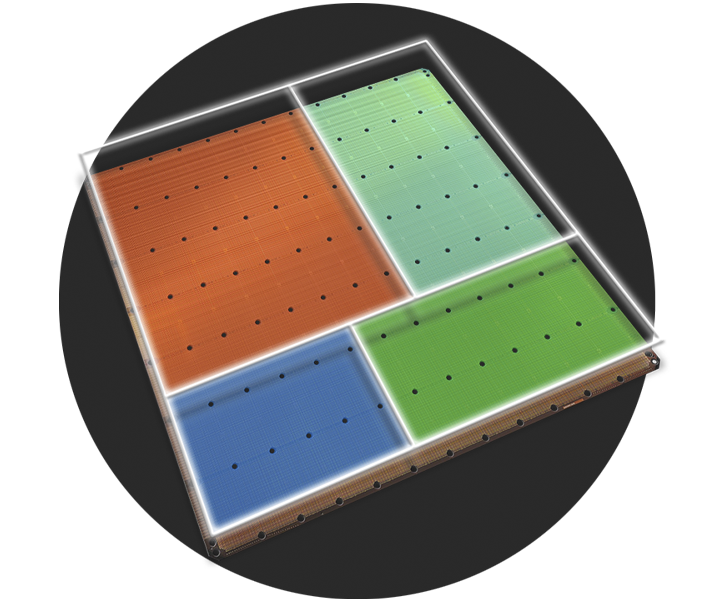

Rather than build a slightly smaller cluster of slightly faster small devices, Cerebras wafer-scale innovation brings the AI compute and memory resources of a cluster onto a single device, making orders-of-magnitude faster training and lower-latency inference easy to use and simple to deploy.

AI Acceleration with No Communication Bottlenecks

The WSE-2 packs 850,000 cores onto a single processor, enabling the CS-2 system to deliver cluster-scale speedup without the communication slowdowns that come from parallelizing work across a massive cluster of devices.

Accessible Performance for Every Organization

One chip in one system means cluster orchestration, synchronization and model tuning are eliminated.. CS-2 makes massive-scale acceleration easy to program for.

Real-time Inference Latencies for Large Models

Keeping compute and memory on chip means extremely low latencies. On the CS-2 system, you can deploy large inference models in a real-time latency budget without quantizing, downsizing, and sacrificing accuracy.

Software That Integrates Seamlessly With Your Workflows

The Cerebras software platform integrates with popular machine learning frameworks like TensorFlow and PyTorch, so researchers can use familiar tools and effortlessly bring their models to the CS-2 system.

No distributed training or parallel computing experience needed. The Cerebras software platform makes massive-scale acceleration easy to program.

A programmable low-level interface allows researchers to extend the platform and develop custom kernels – empowering them to push the limits of ML innovation.

Cerebras Graph Compiler Drives Full Hardware Utilization

The Cerebras Graph Compiler (CGC) automatically translates your neural network to a CS-2 executable.

Every stage of CGC is designed to maximize WSE-2 utilization. Kernels are intelligently sized so that more cores are allocated to more complex work. CGC then generates a placement and routing map , unique for each neural network, to minimize communication latency between adjacent layers.

The Cerebras software platform includes an extensive library of primitives for standard deep learning computations, as well as a familiar C-like interface for developing custom software kernels. A complete suite of debug and profiling tools allows researchers to optimize the platform for their work.

Cerebras Cloud Pricing

Using a Cerebras Cloud instance with Cirrascale ensures no hidden fees with our flat-rate billing model. You pay one price without the worry of fluctuating bills like those at other providers. Pricing shown below is per instance for the total time stated.

| Instance | Specs | Weekly Rate | Monthly Rate | Annual Rate | 3-Year Rate |

|---|---|---|---|---|---|

| CS-2 | 850,000 Optimized Cores 40GB On-Chip SRAM 220Pb/s Interconnect Bandwidth 20PB/s Memory Bandwidth |

$60,000 | $180,000($246.58/hr*) |

$1,650,000($188.36/hr*) |

$3,960,000($150.69/hr*) |

* Cirrascale Cloud Services does not provide servers by the hour. The "hourly equivalent" price is shown as a courtesy for comparison against vendors like AWS, Google Cloud, and Microsoft Azure.

High-Speed and Object Storage Offerings

Cirrascale has partnered with the industry’s top storage vendors to supply our customers with the absolute fastest storage options available. Connected with up to 100Gb Ethernet, our specialized NVMe hot-tier storage offerings deliver the performance needed to eliminate workflow bottlenecks.

| Type | Capacity | Price/GB/Month |

|---|---|---|

| NVMe Hot-Tier Storage | <50TB | $0.40 |

| NVMe Hot-Tier Storage | >50TB | $0.20 |

| Object Storage | <50TB | $0.04 |

| Object Storage | 50TB - 2PB | $0.02 |

| Object Storage | >2PB | CALL |

Ready to use Cerebras Cloud @ Cirrascale?

Ready to take advantage of our flat-rate monthly billing, no ingress/egress data fees, and fast multi-tiered storage with Cerebras Cloud?